How to use research to more effectively and appropriately assess different approaches to teaching reading.

By Pamela Snow

It seems that barely a week goes past in which there is not some kind of debate in social or mainstream media about education and its use of evidence. On one side of this debate, we see calls for the higher visibility of evidence-based (or evidence-informed) practice in teacher pre-service education and everyday practice (disclaimer: I am one of those voices). On the other, we see counter-claims, sometimes framed in postmodern parlance, to the effect that education should be afforded a special place in the professional accountability shadows, because what goes on in classrooms is “too complex”, or because teaching is more of a je ne sais quoi craft that can be neither usefully studied by researchers nor effectively taught to pre-service teachers. Both of these extremes are problematic for practising teachers, particularly those at the start of their careers.

It is true that classrooms (and schools more generally) are extremely complex places. They may comprise students from a range of ethnic, cultural and socio-economic backgrounds, not to mention the variability that exists with respect to prior knowledge, life experience, and aptitude for learning. This complexity is, however, just a microcosm of the communities in which schools exist, in the same way in which hospitals, shopping centres, and public transport hubs are microcosms of the wider communities in which they are located. They all have a degree of formality and structure, of predictability and routine, but all are subject to the vagaries and surprises (pleasant and otherwise) of human nature, and the complex phenomena that occur when humans interact with each other.

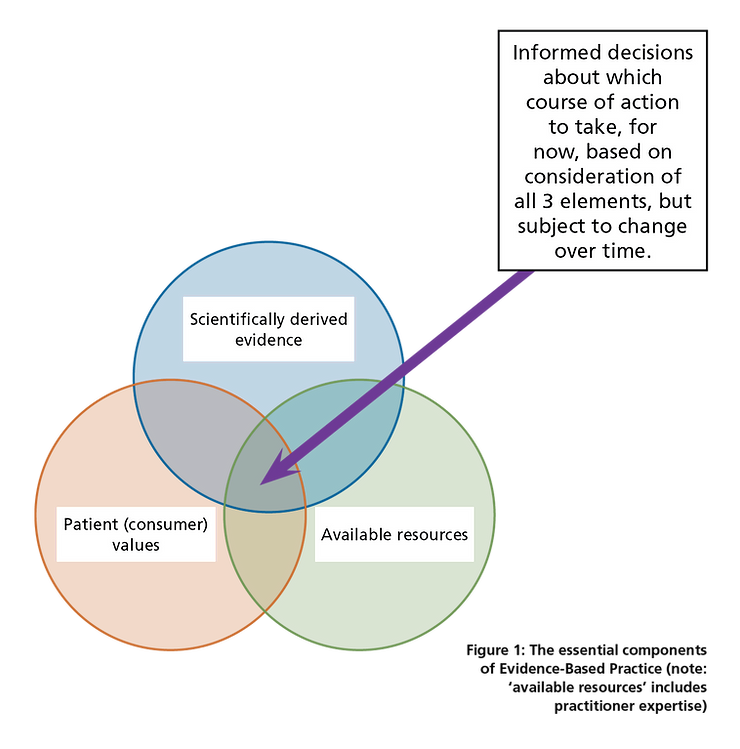

The notion of evidence-based practice (EBP) has its origins in clinical medicine, most notably in the work of a small number of UK-based physicians, such as Sackett, Muir Gray, Cochrane, and others (you can read more about its history here). Importantly, EBP was never only about empirically (scientifically) derived research evidence. From the outset, its advocates emphasised the equal importance of patient values and available resources (which takes in individual practitioner expertise), as can be seen in Figure 1. So, while EBP has never been only about published research evidence, evidence-based decision-making must include reference to this for the term evidence-based to be used.

Multiple meanings attach to the term ‘evidence-based practice’

It is important to acknowledge that in many classrooms, ‘evidence-based practice’ refers to the collection of data on the performance of individual students, so that teachers can (a) monitor their progress and (b) make educational adjustments, such as the provision of extra supports if needed. This take on EBP is neither better nor worse than what health researchers mean when they use the term, but it is certainly different, and different in key ways.

In medicine, for example, doctors use ‘diagnostic information’, or simply ‘test results’, to refer to data on individuals, whereas evidence-based practice is the much bigger-picture lens through which their overall approach to practice is informed and (importantly) changes over time. I think this terminology difference has often gone unrecognised in education debates and has been an invisible source of some cross-purpose frustration.

This should not imply, however, that the only important distinction is between data that are collected in the course of everyday practice and empirically derived published research. There’s another important point of differentiation that needs to be considered in relation to empirically-derived research data, and that is the level of evidence it provides for or against a particular approach. Before we look closely at levels of evidence, though, we need to be mindful of where a study is published.

In general terms, the basic quality hurdle that a teaching approach or therapeutic intervention has to clear is publication in the peer-reviewed literature. Peer-reviewed journals are generally published by esteemed professional bodies and are led by an Editor-in-Chief who oversees an Editorial Board. Some professional bodies are of higher status than others, and this will affect the degree of intellectual rigour in their publications, as well as the proportion of submitted manuscripts that make it through the peer-review process to publication. Peer review, as the name suggests, means that a manuscript is read and closely critiqued by academic counterparts (peers) with relevant expertise. Typically this occurs in a double-blind fashion, i.e., the reviewer is not told the name(s) of the study author(s) and the authors, in turn, are not told who conducted the review. There are pros and cons to this, but they are beyond the scope of our focus here.

So, knowing that a study was published in the highly-ranked Review of Educational Research, rather than the (fictitious) Acme Education Research Club Quarterly, is itself an indicator that goes some of the way to reassuring readers about the quality assurance processes it has been through. Being published in a high-ranking journal is reassuring, but it is not enough on its own to signal to readers that we should change our practice on Monday morning based on its results. We also need to consider the level of evidence attached to an approach. If you are reading the first paper ever about a new approach to supporting children with reading problems, for example, you will be interested and open-minded about the findings, but may rightly exercise caution in changing your practice until further confirmatory evidence begins to emerge. You will also be interested in the strength of the study designs in published studies and the degree to which extraneous variables (e.g., concurrent exposure to other interventions; level of bias in the nature of the sample) have been well-controlled, as well as wanting to know how well initial gains were maintained over time (amongst other questions). As the body of research on an approach builds, we want to see a range of study designs employed, perhaps starting with case studies, working up to cross-sectional and group comparisons, to interrupted time-series, and then randomised controlled trials. Most introductory social sciences research methods texts explain the ins and outs of these study approaches.

Is it ethical to conduct randomised controlled trials (RCTs) in education? My answer to this is yes, and to support this view, I will quote the position of Sackett et al. (1996) in relation to the use of RCTs in health:

> Because the randomised trial, and especially the systematic review of several randomised trials, is so much more likely to inform us and so much less likely to mislead us, it has become the “gold standard” for judging whether a treatment does more good than harm. However, some questions about therapy do not require randomised trials (successful interventions for otherwise fatal conditions) or cannot wait for the trials to be conducted. And if no randomised trial has been carried out for our patient’s predicament, we must follow the trail to the next best external evidence and work from there.

These wise words point to the fact that we are not always able to refer to high levels of published evidence to support our practice. So, practitioners in education, as in health, need to take a probabilistic approach to their practice, recognising that as the published research evidence changes, so too may their practice need to be amended.

I would argue that in many cases it is unethical not to carry out RCTs in education, as we are all subject to a range of cognitive biases (e.g., confirmatory bias, the sunk-cost fallacy, and attribution errors) that blind us to the limitations of our current approaches, and to the potential benefits of changing our practice. Readers might be surprised to know that in some RCTs, the business-as-usual control group fares better than the group allocated to receive the new treatment, even though researchers were, in some cases, doing the RCT in the hope of demonstrating the superiority of a new approach over business-as-usual. You can read more about this in Andrew Leigh’s fascinating 2018 book Randomistas.

Are the winds of change blowing?

Classroom teachers have traditionally been at a long arm’s length from the conduct, critical appraisal, and everyday use of education research. Pre-service teachers have long sat in education faculty lecture theatres (and more recently engaged via online university platforms) to be taught the worldviews of Piaget, Bruner, Vygotsky, and Dewey (to name a few) as positions of fact, and then completed teaching rounds (practicum) to learn (if they are lucky) the craft part of managing a classroom and the work of actually teaching content to students. The notion that there is an entire industry beavering away in the background, across a range of disciplines, striving to improve the body of knowledge on which classroom practice is built, was not, according to the many teachers with whom I engage, ever discussed.

Happily, that is changing, albeit slowly. The advent of Twitter has provided a lively, multi-disciplinary platform on which classroom teachers can engage with university researchers across a range of disciplines. Further, university academics are rightly under more pressure than ever before from funding bodies and governments to demonstrate the relevance and everyday applicability of their research. Where only a decade ago academic articles withered in hard copy on the shelves of university libraries, now they are tweeted and shared via Facebook, ideally in open access (i.e., non-paywalled) formats so that anyone can access them and pass judgement on their merit.

This is generally a good thing; however, its true value can only be realised if teachers are equipped, during their pre-service education, with a solid grasp of research methodology and the tools of critical appraisal, so they can be astute consumers of new research across their working lives, and make judgements about how to modify their practices accordingly. As observed by Dr Louisa Moats, reading education suffers from a “lack of rigour and respect for evidence” (p. 12). This has created the paradoxical, but unacceptable impasse in which it is extremely difficult to translate cognitive science findings into classroom practice, but any number of pseudoscientific fads and fashions have been uncritically and enthusiastically installed in classroom processes.

As a community, we entrust one of our most precious resources to teachers for the better part of their waking hours, over the majority of the year. Teacher wellbeing and student outcomes hinge on having the best available knowledge and tools at teachers’ disposal, as well as the skills to modify practice over time as the research evidence evolves, irrespective of the extent to which new findings affirm or challenge our prior assumptions and beliefs. This is a tall order for us all.

This article was published in the October 2019 edition of Nomanis.

Professor Pamela Snow is Head of the La Trobe Rural Health School, at the Bendigo campus of La Trobe University. She is both a psychologist and speech pathologist and her research interests concern early oral language and literacy skills, and the use of evidence to inform classroom practices. In January 2020, Pamela is taking up a new appointment as Professor of Cognitive Psychology in the School of Education at the Bendigo campus of La Trobe University.

This article first appeared in the June 2019 InSpEd newsletter.
